sqoop - How Sqoop Works - apache sqoop - sqoop tutorial - sqoop hadoop

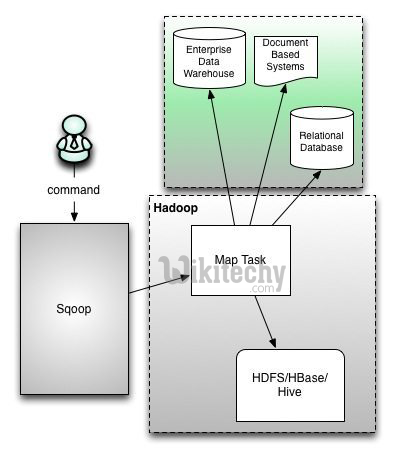

How Sqoop Works?

- Sqoop provides a pluggable mechanism for optimal connectivity to external systems.

- The Sqoop extension API provides a convenient framework for building new connectors which can be dropped into Sqoop installations to provide connectivity to various systems.

- Sqoop itself comes bundled with various connectors that can be used for popular database and data warehousing systems.

Learn sqoop - sqoop tutorial - sqoop illustration - sqoop examples - sqoop programs

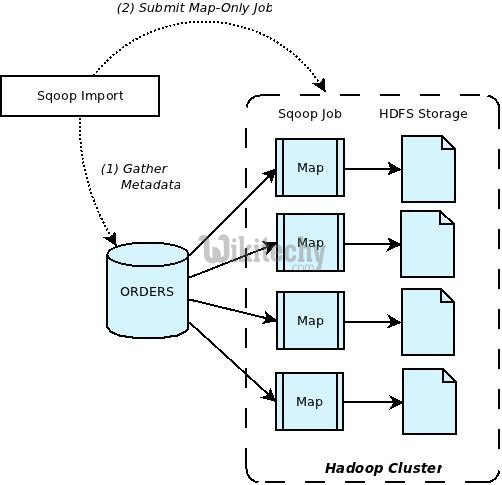

The above diagram explains how sqoop uses the import command internally to retrieve data from other databases.

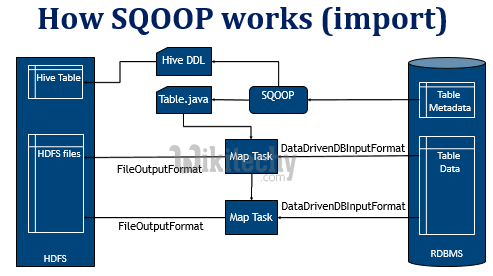

Import Data:

Command used for importing data (Employee table) from other MYSQL databases:

sqoop import -connect jdbc:mysql://localhost:3396/din -table Employee -username dinesh -password ****

Click "Copy code" button to copy into clipboard - By wikitechy - sqoop tutorial - team

- import: command that tells Sqoop to initiate an import.

- connect <connect string>, -username <user name >, -password <password >: connection parameters used to connect with the database.

- table <table name> : table to be imported.

Learn sqoop - sqoop tutorial - how sqoop works - sqoop examples - sqoop programs

Step 1

- sqoop analyses database and frames metadata in order for the data to be retrieved.

Step 2

- In the next step it uses map job to transfer data to HDFS using the metadata formed in the first step.

- Data will be imported to the HDFS system under a file related to the retrieved table.

- Sqoop also provides features to specify the file name in the HDFS system.

Step 3

- The file created by HDFS will have comma separated values for each column and each row will be placed in a new line.

- Based on the size of the table, HDFS may create one or more files. Sqoop provisions various format for importing data, for eg: using option -as -avrodatafile will import in avro format.

Sqoop related tags : sqoop import , sqoop interview questions , sqoop export , sqoop commands , sqoop user guide , sqoop documentation

Export Data:

- The Export Command used for exporting data (Employee table) to MYSQL database:

sqoop export -connect jdbc:mysql://localhost:3396/din -table Employee -username dinesh -password **** -export-dir /user/din/Employee

Click "Copy code" button to copy into clipboard - By wikitechy - sqoop tutorial - team

- export: command that tells Sqoop to initiate an export.

- connect <connect string>, -username <user name >, -password <password >: connection parameters used to connect with the database.

- table <table name>: table to be loaded with data.

- export-dir <directory path>: HDFS directory from where data will be exported.

- sqoop gathers meta data for which it should export data and then it performs a map job which transfers data.

- Sqoop performs this transfer by splitting the input data set into various map tasks and sends it to the database.

- Map job implements this transfer over several transactions to ensure optimal throughput and minimal resource utilization.

- Some of the connectors in sqoop facilitate a staging table concept where the data set will be transferred to a staging table first and then to a target table.

- This prevents data loss in case of a failure so the job can be repeated again if there is a failure.

How to run sqoop :

Sqoop TOOL PROPERTY_ARGS SQOOP_ARGSClick "Copy code" button to copy into clipboard - By wikitechy - sqoop tutorial - team

- TOOL - indicates the operation that you want to perform, e.g import, export etc

- PROPERTY_ARGS - are a set of parameters that are entered as

- Java properties in the format -Dname=value. SQOOP_ARGS - all the various sqoop parameters.

Sqoop – How to run sqoop

sqoop import \

--connect jdbc:oracle:thin:@devdb11-s.cern.ch:10121/devdb11_s.cern.ch \

--username hadoop_tutorial \

-P \

--num-mappers 1 \

--target-dir visitcount_rfidlog \

--table VISITCOUNT.RFIDLOG

Click "Copy code" button to copy into clipboard - By wikitechy - sqoop tutorial - team

Sqoop – how to parallelize :

-- table table_name

-- query select * from table_name where $CONDITIONS

-- table table_name

-- split-by primary key

-- num-mappers n

-- table table_name

-- split-by primary key

-- boundary-query select range from dual

-- num-mappers n