What is Deep Learning - Deep Learning Tutorial

Deep Learning Introduction

- Deep learning is a subset of machine learning, which is itself a branch of artificial intelligence.

- In deep learning, the features and rules are not programmed explicitly; the model learns them from data.

- It is a class of machine learning methods that uses many layers of nonlinear processing units to perform feature extraction and transformation.

- Each successive layer takes the output of the preceding layer as its input.

- Deep learning models help with the problem of high dimensionality and can learn to focus on the relevant features themselves, with little guidance from the programmer.

- Deep learning algorithms are used especially when there is a huge number of inputs and outputs.

- It is implemented with neural networks, whose design is motivated by biological neurons.

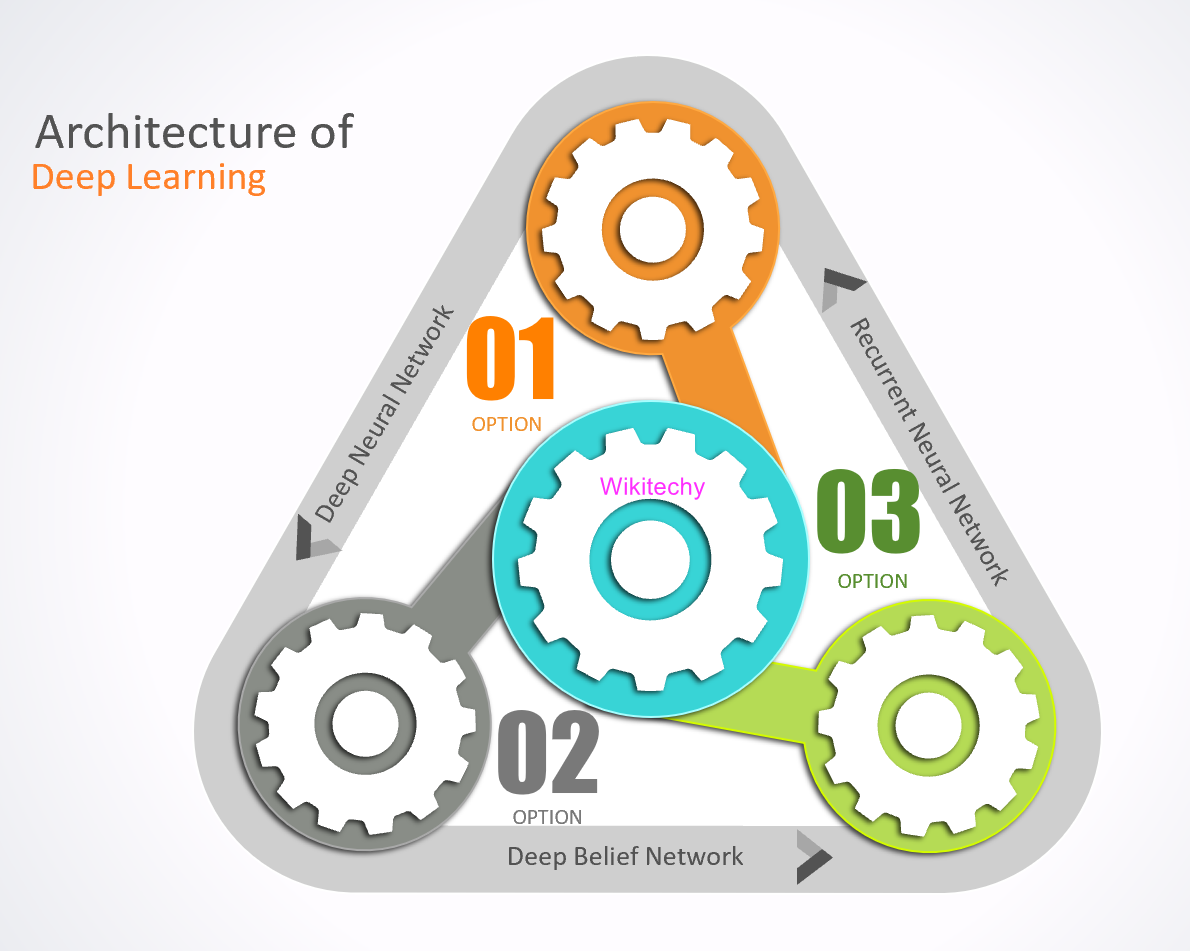

Architectures of Deep Learning

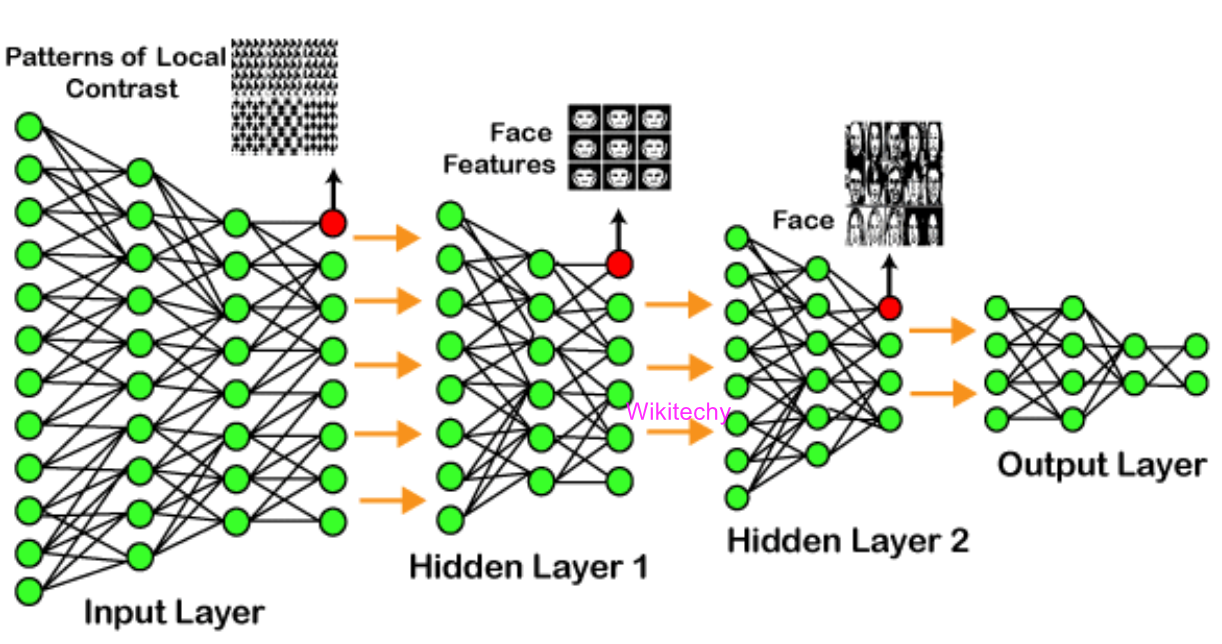

Deep Neural Network

- It is a neural network with several hidden layers between the input and output layers, which gives it a certain level of complexity.

- Such networks are highly proficient at modeling and processing non-linear relationships.

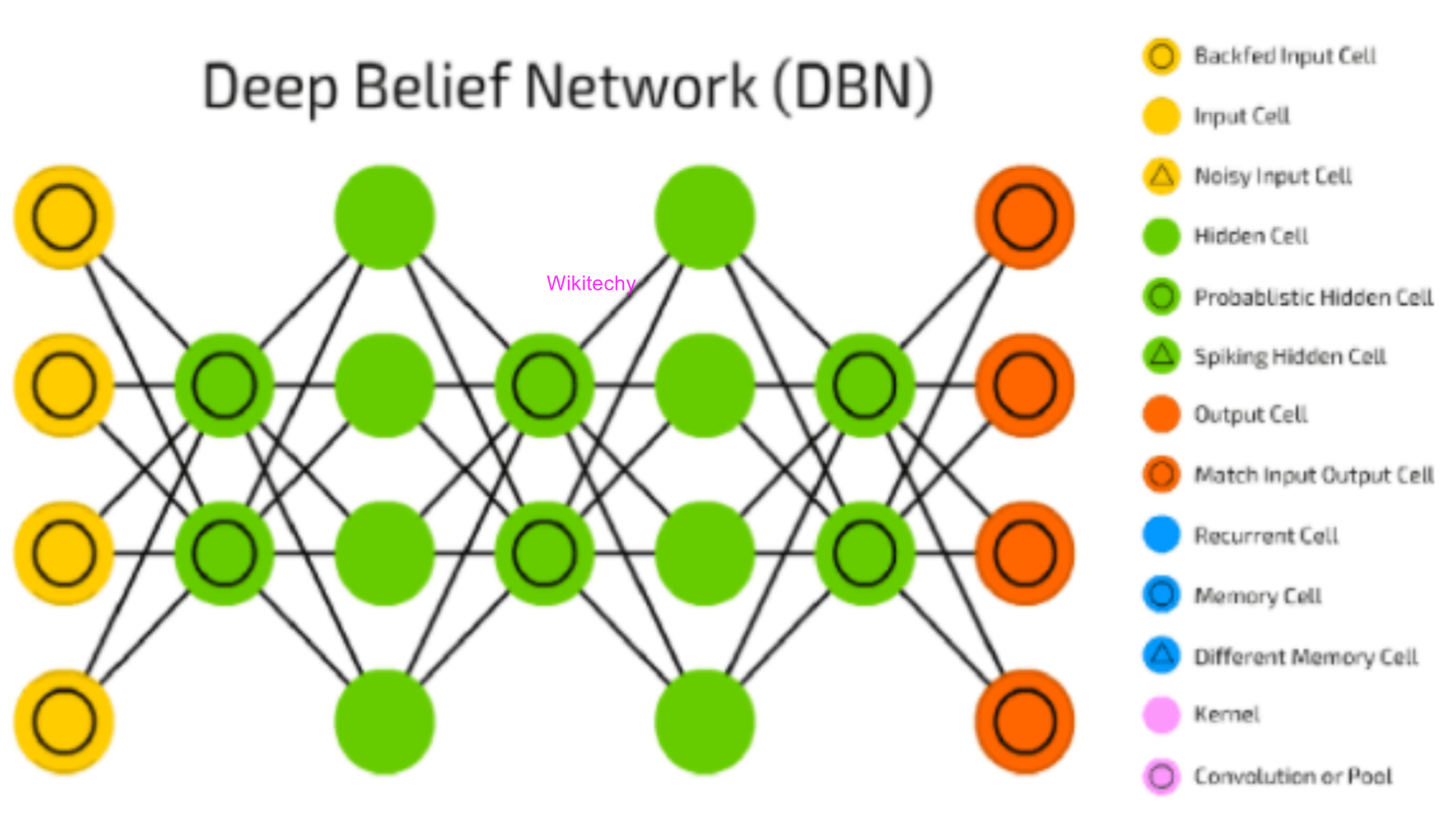
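
The layered structure described above can be sketched in plain Python. This is a minimal illustration, not a reference implementation: the layer sizes, the ReLU activation and the random weights are assumptions chosen for the example.

```python
import random

def dense(inputs, weights, biases):
    """One fully connected layer: weighted sum of the inputs plus a bias, per unit."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def relu(values):
    """Nonlinear activation applied after each hidden layer (an assumed choice)."""
    return [max(0.0, v) for v in values]

def forward(inputs, layers):
    """Each layer consumes the output of the layer before it."""
    activation = inputs
    for index, (weights, biases) in enumerate(layers):
        activation = dense(activation, weights, biases)
        if index < len(layers) - 1:  # hidden layers are nonlinear, the output stays linear
            activation = relu(activation)
    return activation

def random_layer(n_in, n_out, rng):
    weights = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out

rng = random.Random(0)
layer_sizes = [4, 8, 8, 2]  # input, two hidden layers, output (illustrative sizes)
layers = [random_layer(a, b, rng) for a, b in zip(layer_sizes, layer_sizes[1:])]
output = forward([0.5, -1.2, 3.0, 0.1], layers)
```

Adding entries to `layer_sizes` makes the network deeper without changing `forward`, which is the sense in which depth is just more layers between input and output.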

Deep Belief Network

- It is a class of deep neural network composed of multiple layers of belief networks.

- A layer of features is learned from the visible units with the help of the Contrastive Divergence algorithm.

- Then, the previously learned features are treated as visible units, from which the next layer of features is learned.

- Finally, once the last hidden layer has been learned, the whole DBN is trained.

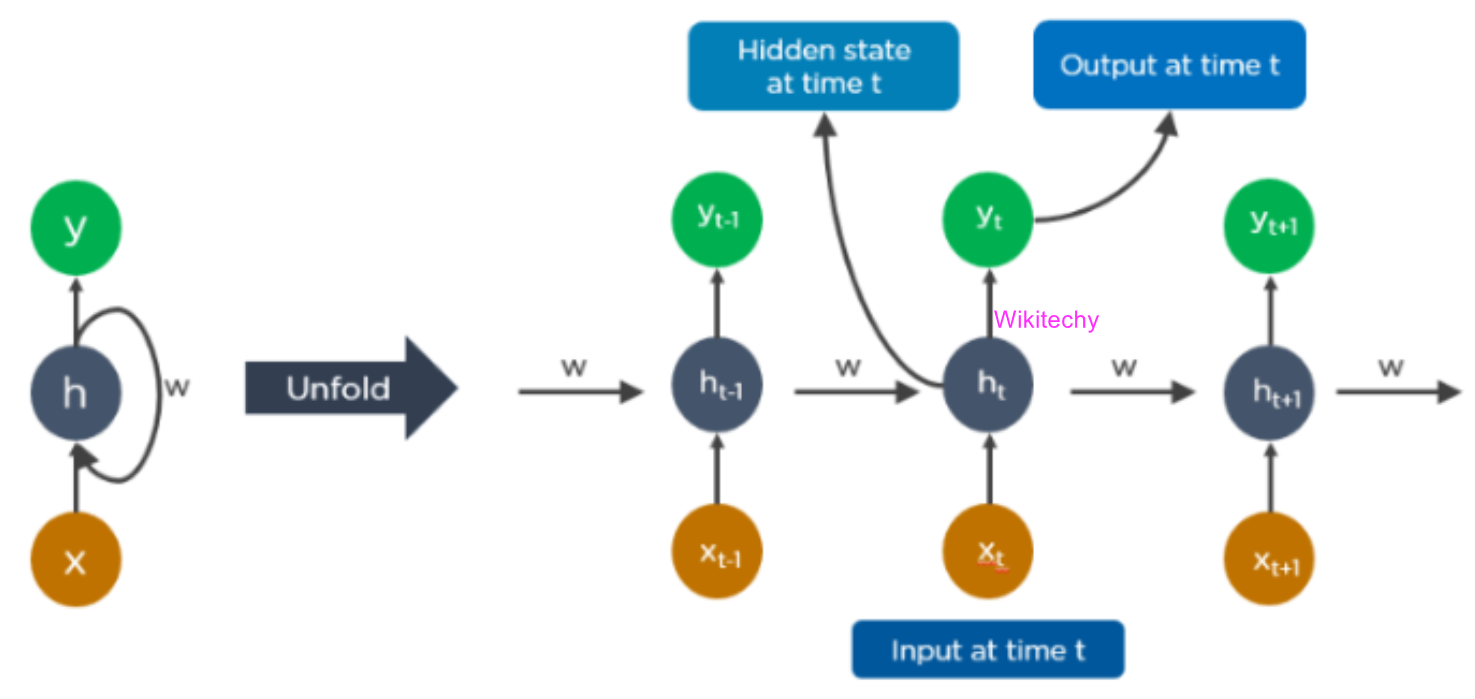

Recurrent Neural Networks

- It loosely resembles the large feedback network of connected neurons in the human brain and permits both parallel and sequential computation.

- Because they can remember the important things about the input they have received, they can make more precise predictions.

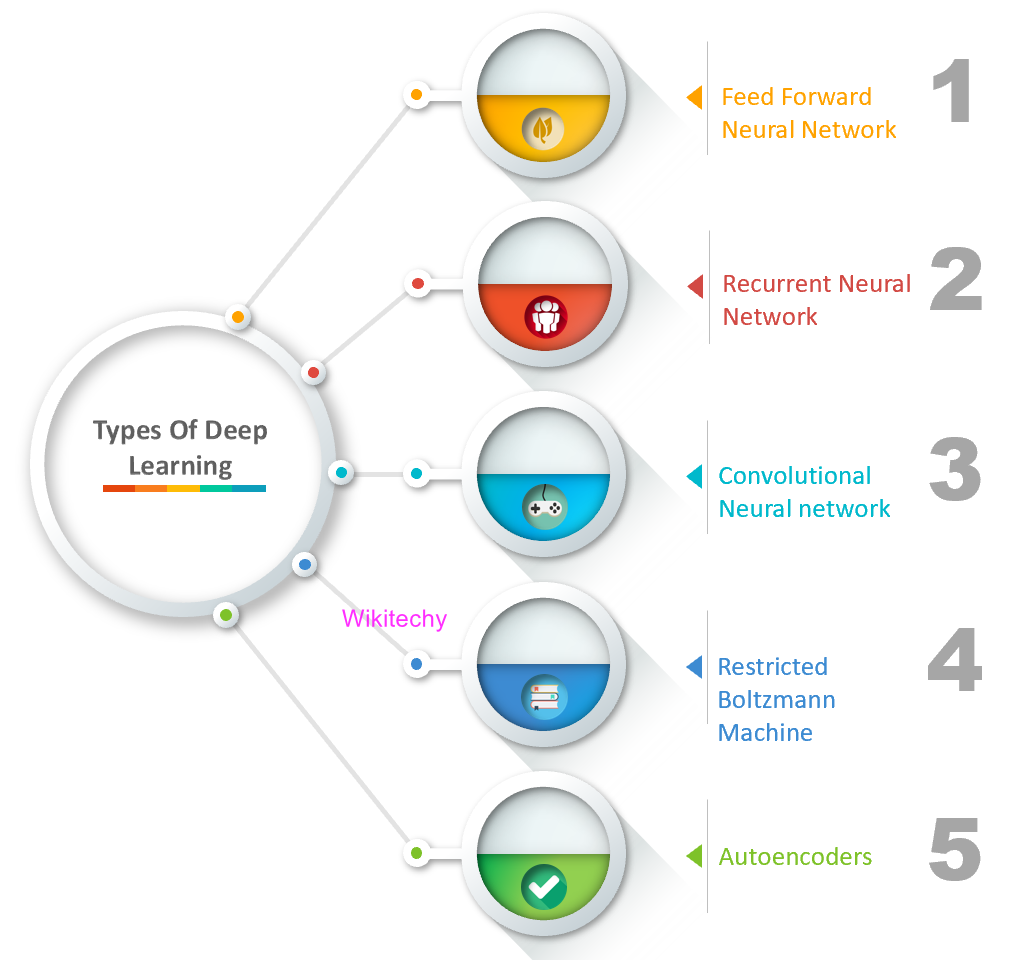

Types of Deep Learning Networks

Feed Forward Neural Network

- It is an artificial neural network in which the connections between nodes do not form a cycle.

- In a feed-forward neural network, all the perceptrons are organized in layers: the input layer takes the input, and the output layer generates the output.

- The hidden layers have no link with the outside world, which is why they are called hidden layers.

- Each perceptron in one layer is connected to every node in the subsequent layer.

- There are no connections, visible or invisible, between nodes in the same layer.

- There are no back-loops in a feed-forward neural network, and adjacent layers are fully connected.

- The backpropagation algorithm can be used to update the weight values so as to minimize the prediction error.

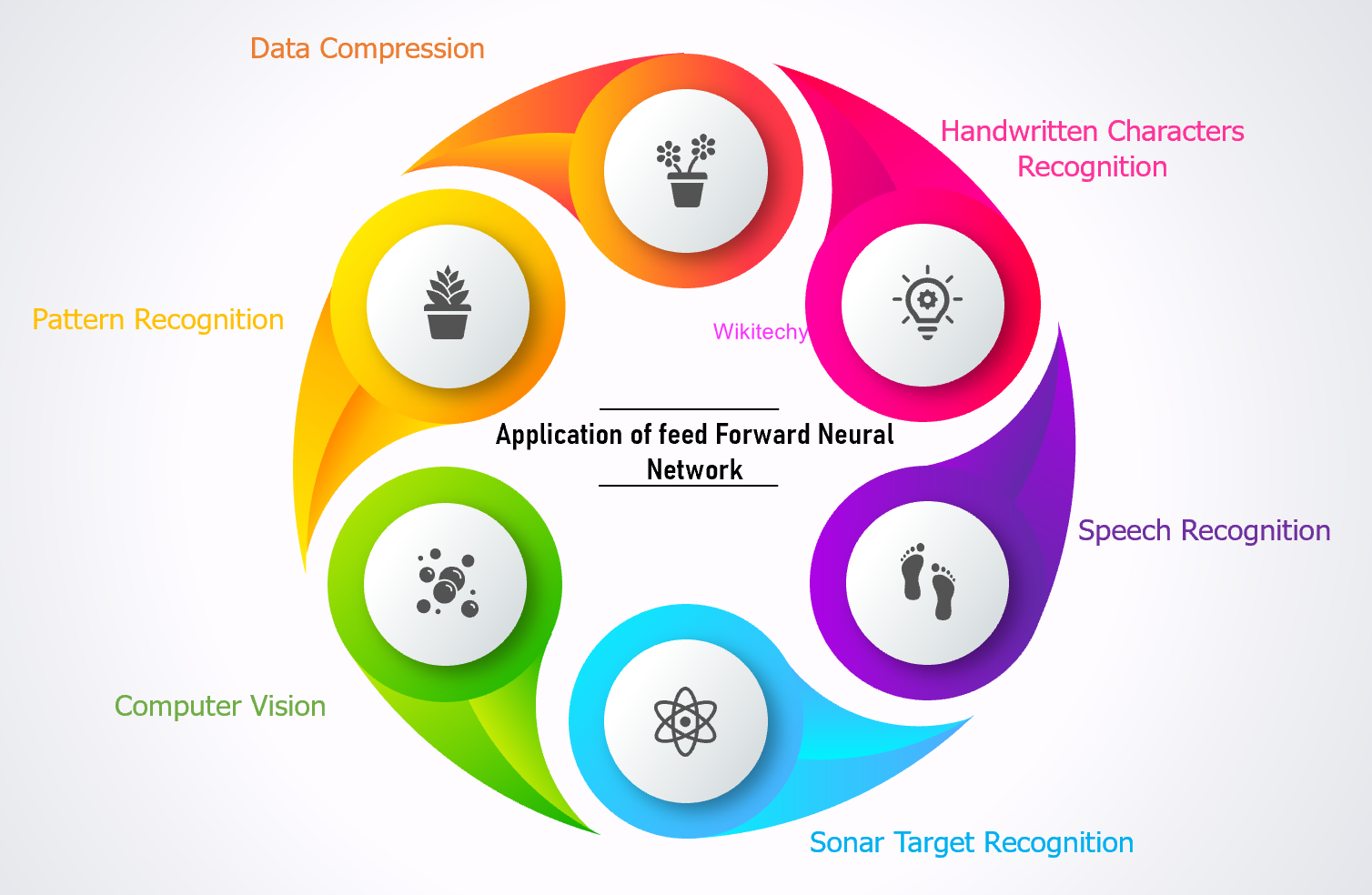
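
As a rough sketch of how backpropagation updates the weights of such a network, the example below trains one hidden layer on a toy data set. The data set (logical OR), the sigmoid activation, the squared-error loss, the learning rate and all function names are assumptions made for illustration.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(x, W1, b1, W2, b2):
    """Input -> hidden layer -> single output unit; no cycles, no same-layer links."""
    hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
              for row, b in zip(W1, b1)]
    output = sigmoid(sum(w * h for w, h in zip(W2, hidden)) + b2)
    return hidden, output

def mean_squared_error(data, W1, b1, W2, b2):
    return sum((forward(x, W1, b1, W2, b2)[1] - y) ** 2 for x, y in data) / len(data)

def train(data, n_hidden=3, epochs=2000, lr=0.5, seed=0):
    rng = random.Random(seed)
    n_in = len(data[0][0])
    W1 = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)]
    b1 = [0.0] * n_hidden
    W2 = [rng.uniform(-1, 1) for _ in range(n_hidden)]
    b2 = 0.0
    loss_before = mean_squared_error(data, W1, b1, W2, b2)
    for _ in range(epochs):
        for x, y in data:
            hidden, out = forward(x, W1, b1, W2, b2)
            # Error signal at the output unit (squared error through a sigmoid).
            delta_out = (out - y) * out * (1.0 - out)
            # Propagate the error back through W2 to each hidden unit.
            delta_hidden = [delta_out * W2[j] * hidden[j] * (1.0 - hidden[j])
                            for j in range(n_hidden)]
            # Gradient-descent update of every weight and bias.
            for j in range(n_hidden):
                W2[j] -= lr * delta_out * hidden[j]
                b1[j] -= lr * delta_hidden[j]
                for i in range(n_in):
                    W1[j][i] -= lr * delta_hidden[j] * x[i]
            b2 -= lr * delta_out
    loss_after = mean_squared_error(data, W1, b1, W2, b2)
    return loss_before, loss_after

# Toy data set (an assumption for this sketch): the logical OR of two inputs.
or_data = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]
loss_before, loss_after = train(or_data)
```

Comparing `loss_before` with `loss_after` shows the prediction error shrinking as the weights are adjusted.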

Applications of Feed Forward Neural Network

Recurrent Neural Network

- It is a variation of the feed-forward network in which each neuron in the hidden layers receives an input with a specific delay in time.

- It can access information from preceding time steps while processing the current one.

- It not only processes the inputs but also shares its weights across time, whatever the length of the sequence.

- The size of the model therefore does not grow as the input size grows.

- Its drawbacks are slow computation, the fact that it does not consider any future input when computing the current state, and difficulty remembering information from far back.

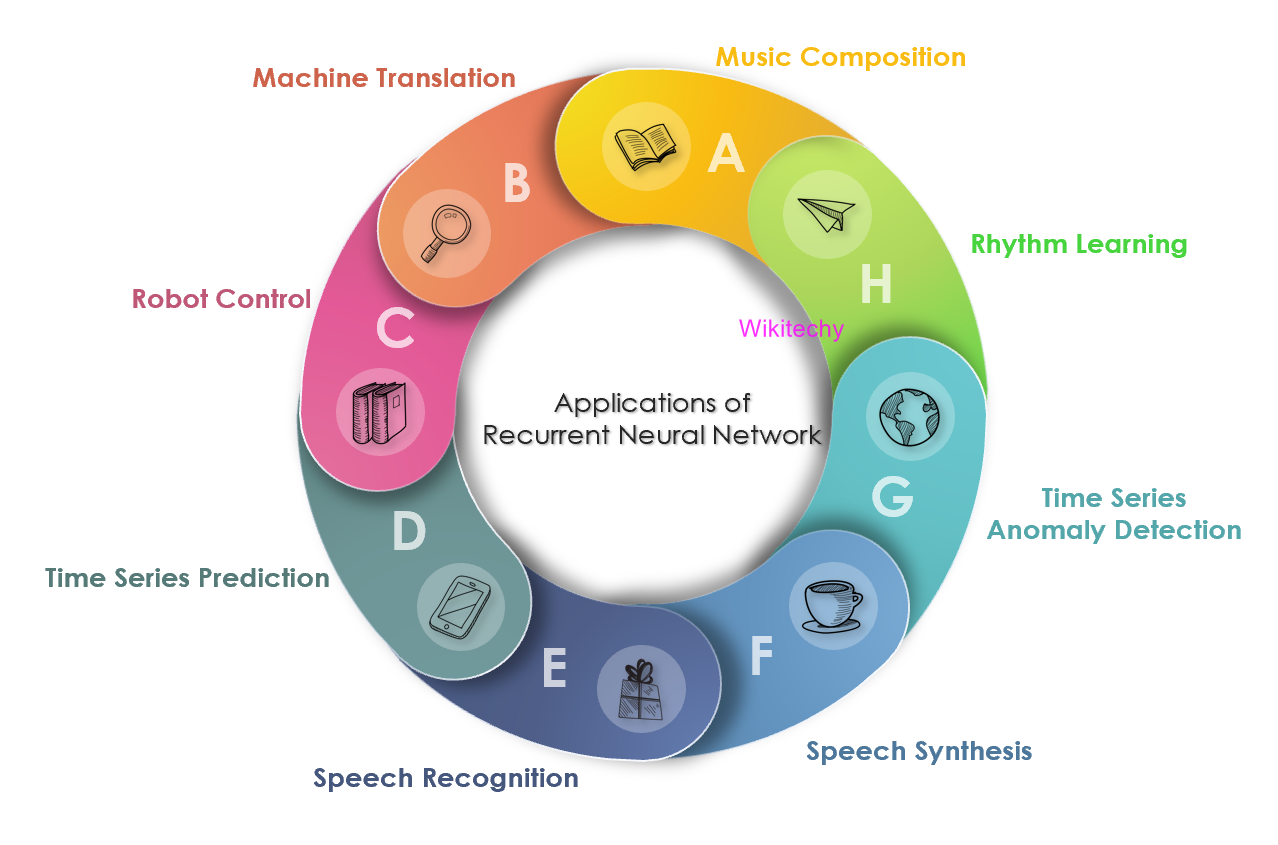
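
The weight sharing described above can be sketched as follows. The tanh activation, the tiny weight matrices and the names `rnn_step` and `run_rnn` are illustrative assumptions; the point is only that the same parameters are reused at every time step.

```python
import math

def rnn_step(x_t, h_prev, W_xh, W_hh, b_h):
    """One time step: the new hidden state mixes the current input with the previous state."""
    return [
        math.tanh(
            sum(w * x for w, x in zip(W_xh[j], x_t))
            + sum(w * h for w, h in zip(W_hh[j], h_prev))
            + b_h[j]
        )
        for j in range(len(b_h))
    ]

def run_rnn(sequence, W_xh, W_hh, b_h):
    """Apply the same weights at every step, carrying the hidden state forward."""
    h = [0.0] * len(b_h)
    states = []
    for x_t in sequence:
        h = rnn_step(x_t, h, W_xh, W_hh, b_h)
        states.append(h)
    return states

# Two hidden units, one input feature (illustrative values).
W_xh = [[0.5], [-0.3]]
W_hh = [[0.1, 0.2], [0.0, 0.4]]
b_h = [0.0, 0.0]

short_states = run_rnn([[1.0], [0.5]], W_xh, W_hh, b_h)
long_states = run_rnn([[1.0], [0.5], [-1.0], [2.0], [0.0]], W_xh, W_hh, b_h)
```

Both sequences are processed with the same six weights and two biases, so the model does not grow with the input length. Each state depends only on the current and earlier inputs, never on future ones.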

Applications of Recurrent Neural Network

Convolutional Neural Network

- It is a special kind of neural network mainly used for image classification, clustering of images and object recognition.

- Deep neural networks enable the unsupervised construction of hierarchical image representations.

- Deep convolutional neural networks are often preferred over other kinds of neural network for image tasks when the best accuracy is required.

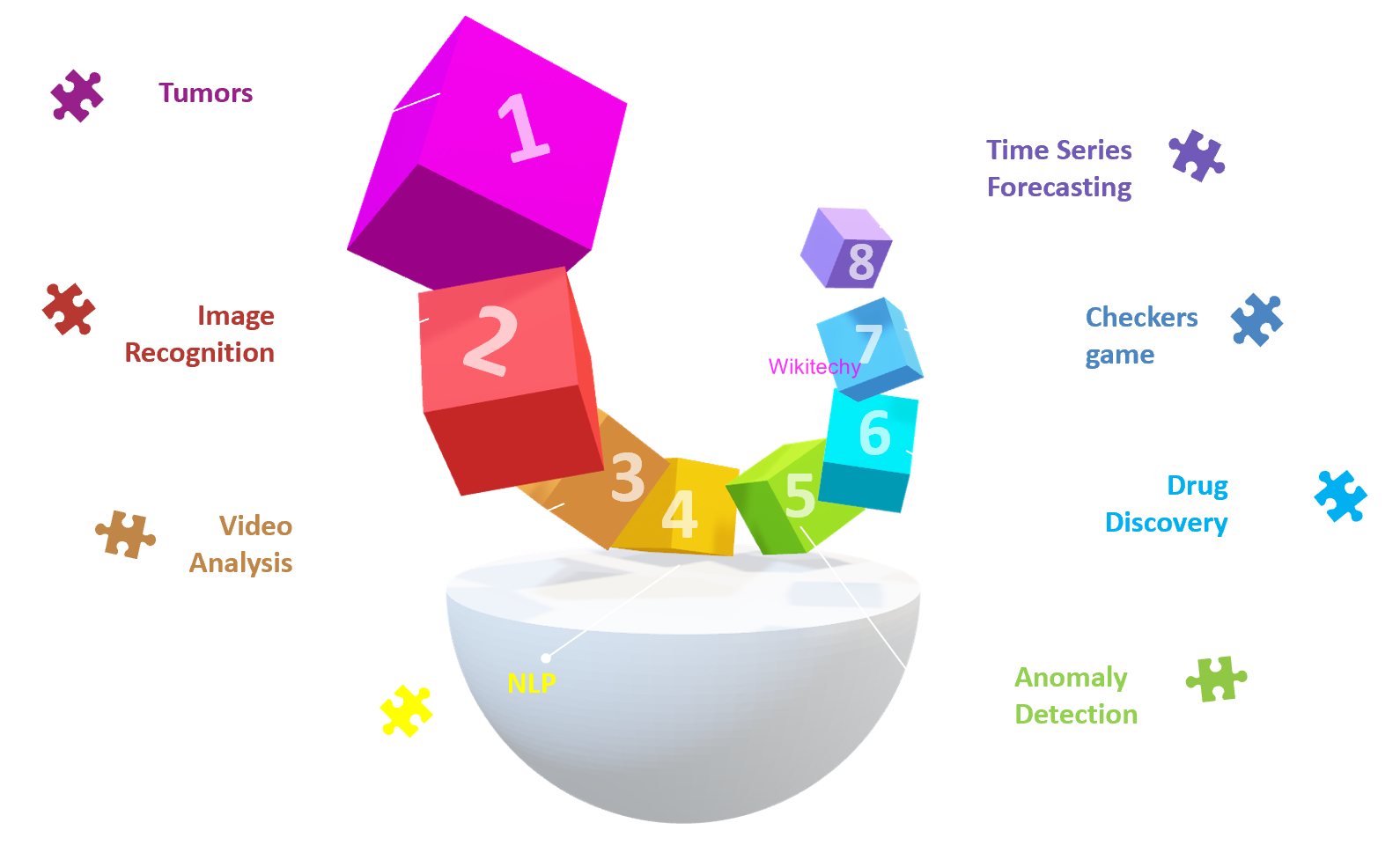

Applications of Convolutional Neural Network

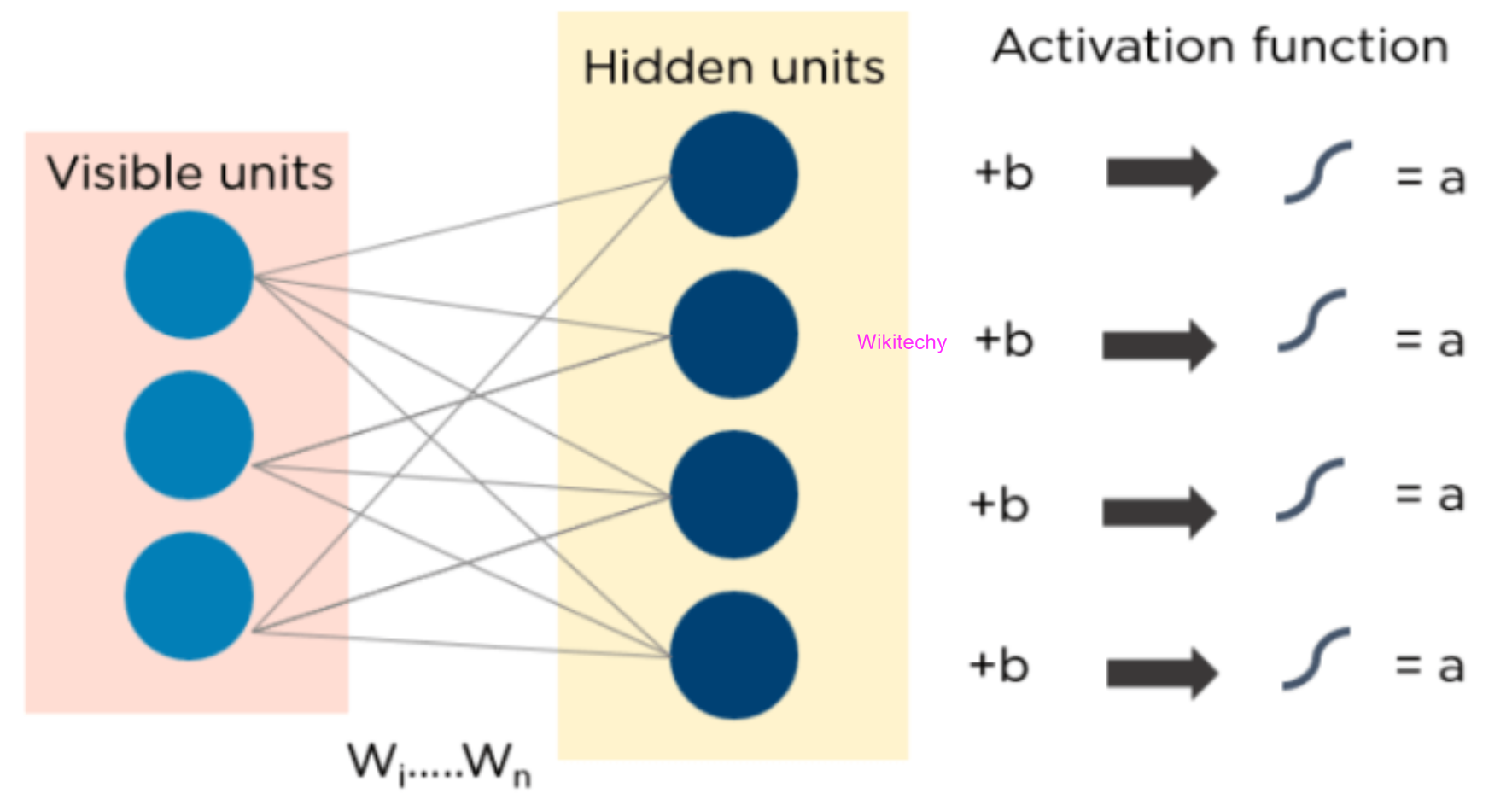

Restricted Boltzmann Machine

- Restricted Boltzmann Machines are a variant of Boltzmann Machines in which the neurons of the input layer and the hidden layer are joined by symmetric connections.

- Within each layer, there are no connections between neurons.

- This restriction is what distinguishes an RBM: general Boltzmann machines do have internal connections inside the hidden layer.

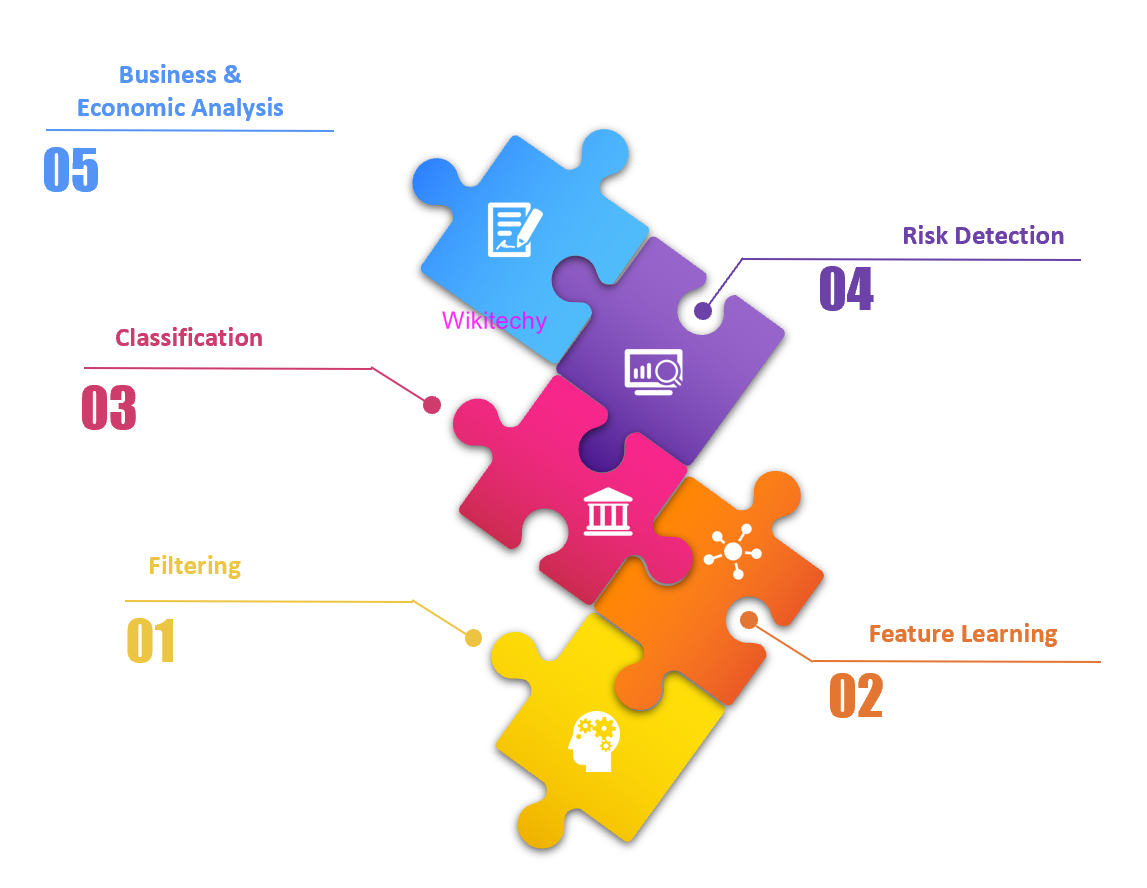

Applications of Restricted Boltzmann Machine

Autoencoders

- An autoencoder neural network is another kind of unsupervised machine learning algorithm in which the number of hidden cells is smaller than the number of input cells.

- The number of input cells equals the number of output cells.

- It is trained to produce an output similar to the input it was fed, which forces the autoencoder to find common patterns and generalize the data.

- Autoencoders are mainly used to obtain a smaller representation of the input and to reconstruct the original data from that compressed representation.

- The algorithm is comparatively simple because the training target is the input itself, so no labels are needed.

- The encoder maps the input data to a lower-dimensional representation, and the decoder reconstructs the data from that compressed representation.

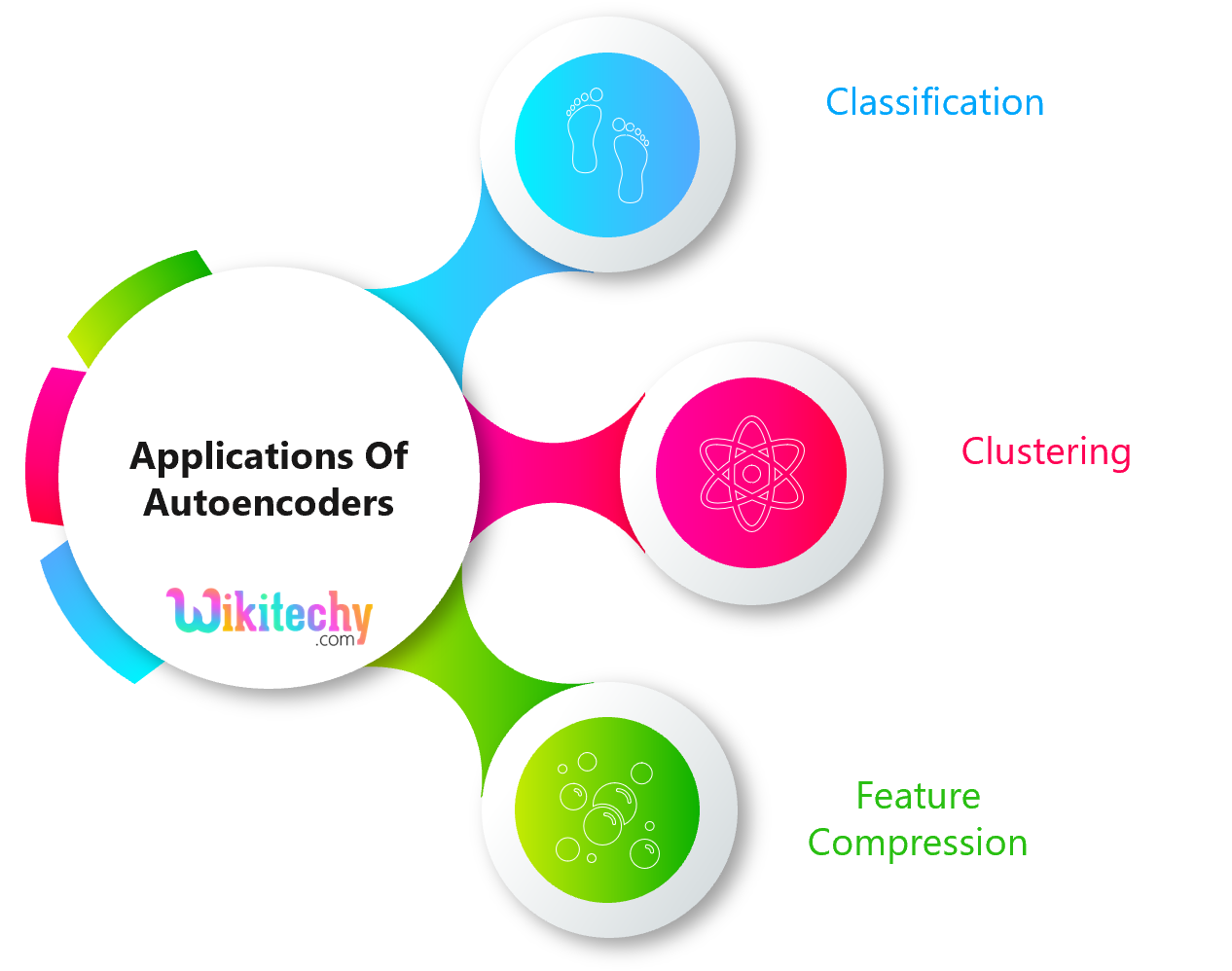
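
A minimal sketch of the encoder and decoder idea, assuming a purely linear autoencoder trained by gradient descent on the squared reconstruction error. The toy data, the 4-2-4 layout, the learning rate and the function names are all assumptions for illustration.

```python
import random

def matvec(matrix, vector):
    return [sum(m * x for m, x in zip(row, vector)) for row in matrix]

def reconstruction_error(data, W_enc, W_dec):
    total = 0.0
    for x in data:
        x_hat = matvec(W_dec, matvec(W_enc, x))
        total += sum((a - b) ** 2 for a, b in zip(x_hat, x))
    return total / len(data)

def train_autoencoder(data, n_hidden, epochs=1000, lr=0.01, seed=0):
    rng = random.Random(seed)
    n_in = len(data[0])
    # Encoder: n_in -> n_hidden (compression); decoder: n_hidden -> n_in.
    W_enc = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_hidden)]
    W_dec = [[rng.uniform(-0.5, 0.5) for _ in range(n_hidden)] for _ in range(n_in)]
    error_before = reconstruction_error(data, W_enc, W_dec)
    for _ in range(epochs):
        for x in data:
            code = matvec(W_enc, x)        # compressed representation
            x_hat = matvec(W_dec, code)    # reconstruction
            err = [a - b for a, b in zip(x_hat, x)]  # the target is the input itself
            # Gradient of the error with respect to the code, through the decoder.
            grad_code = [sum(W_dec[i][j] * err[i] for i in range(n_in))
                         for j in range(n_hidden)]
            for i in range(n_in):
                for j in range(n_hidden):
                    W_dec[i][j] -= lr * err[i] * code[j]
            for j in range(n_hidden):
                for i in range(n_in):
                    W_enc[j][i] -= lr * grad_code[j] * x[i]
    error_after = reconstruction_error(data, W_enc, W_dec)
    return W_enc, W_dec, error_before, error_after

# Toy data with two repeating patterns, so two hidden cells are enough (assumed example).
data = [[1.0, 0.0, 1.0, 0.0], [0.0, 1.0, 0.0, 1.0],
        [1.0, 0.0, 1.0, 0.0], [0.0, 1.0, 0.0, 1.0]]
W_enc, W_dec, error_before, error_after = train_autoencoder(data, n_hidden=2)
code = matvec(W_enc, data[0])
```

Here `code` has two values while each input has four, which is the "smaller representation" the bullets refer to.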

Applications of Autoencoders

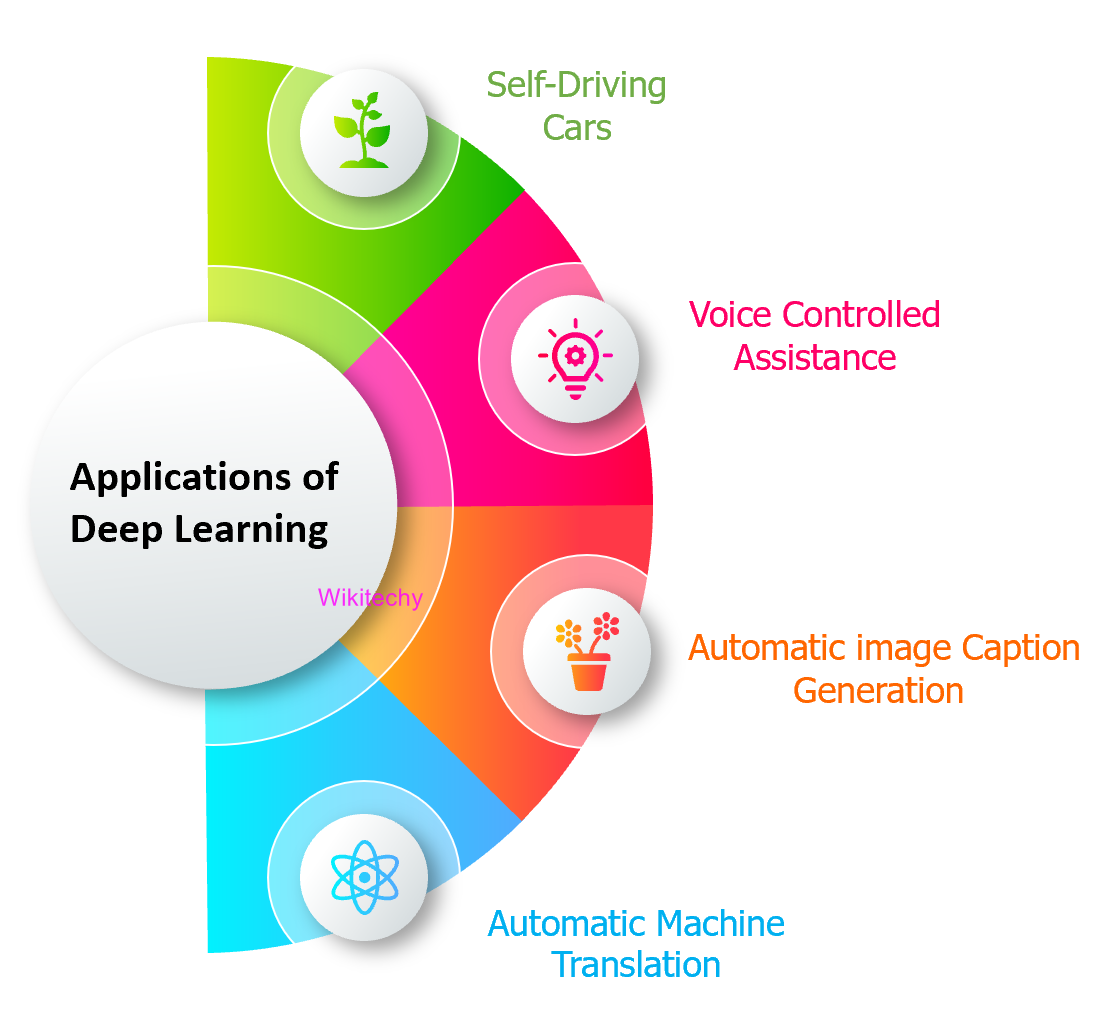

Applications of Deep Learning

Self-Driving Cars

- A self-driving car captures images of its surroundings and processes a huge amount of data.

- It then decides which action to take: turn right, turn left, or stop.

- Acting on these decisions can help reduce the number of accidents that happen every year.

Voice Controlled Assistance

- Siri is the first thing that comes to mind when we talk about voice-controlled assistance.

- We can tell Siri what we want it to do, and it will search for it and display the result.

Automatic Image Caption Generation

- Whatever image we upload, the algorithm generates a caption for it.

- For example, for an image of a brown eye, it shows the image with a caption such as "brown-colored eye" at the bottom.

Automatic Machine Translation

- With the help of deep learning, automatic machine translation lets us convert text from one language into another.

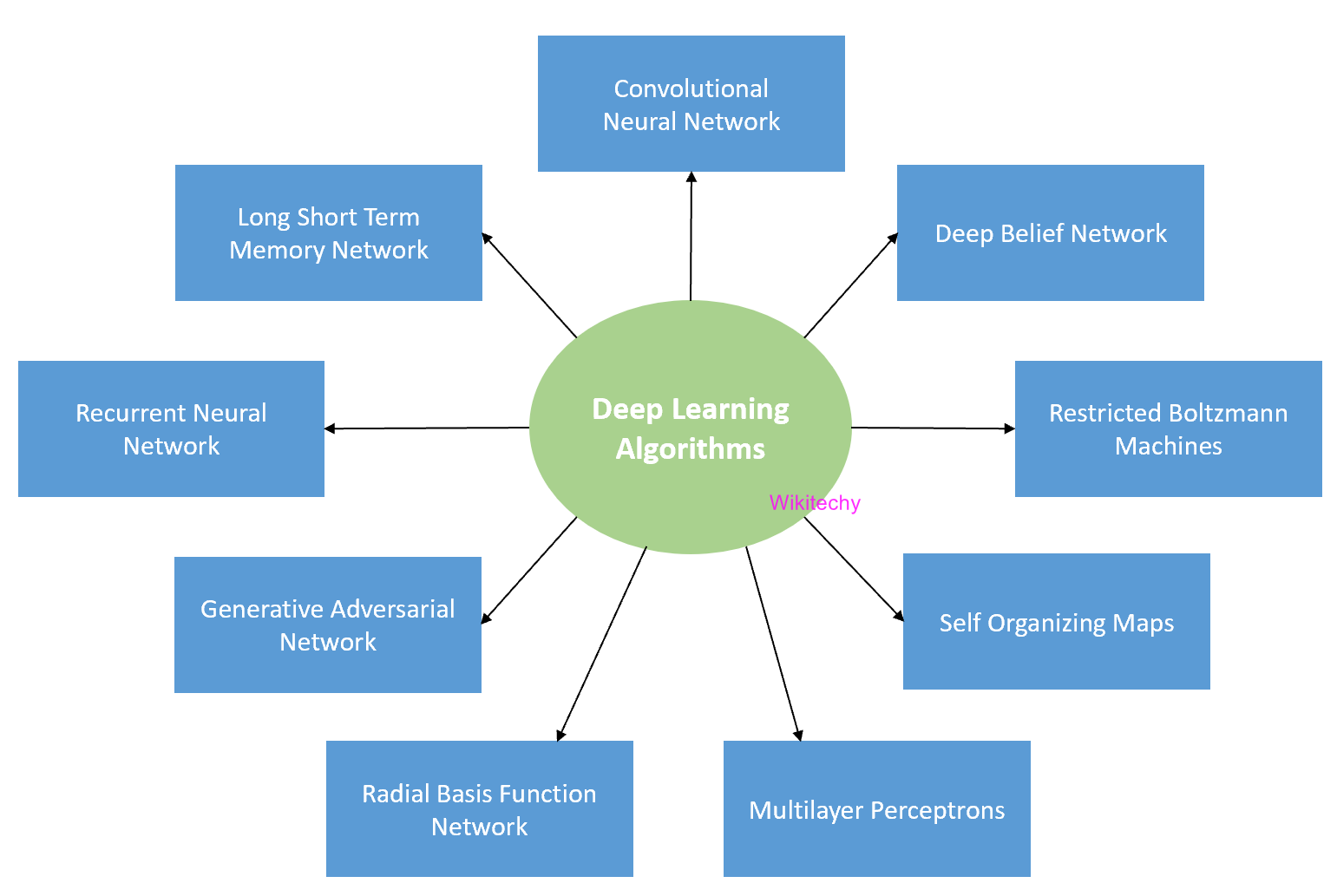

Deep Learning Algorithms

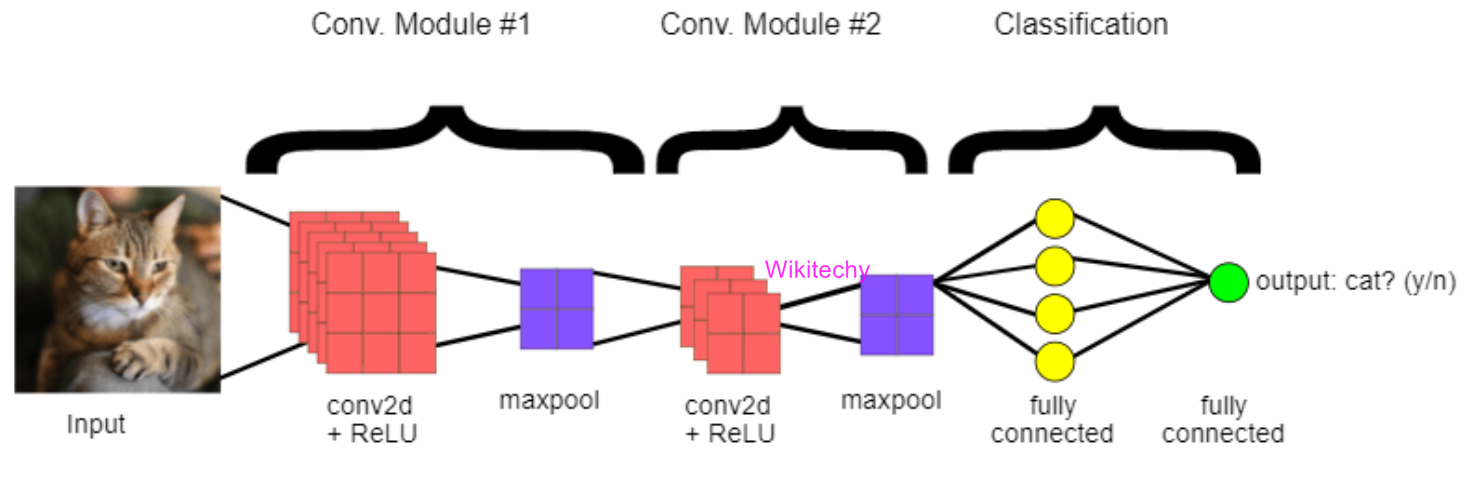

Convolutional Neural Network

- Convolutional Neural Networks were first developed to recognize digits and zip code characters and are now widely used for identifying satellite images, time series forecasting, anomaly detection and medical image processing.

- A CNN processes the data by passing it through multiple layers that extract features using convolution operations.

- The convolutional layer is followed by a Rectified Linear Unit (ReLU), which rectifies the feature map, and the rectified feature maps are then fed into a pooling layer.

- Pooling is a down-sampling operation that reduces the dimensions of the feature map.

- Finally, the resulting two-dimensional arrays of the pooled feature map are flattened into a single, long, continuous, linear vector.

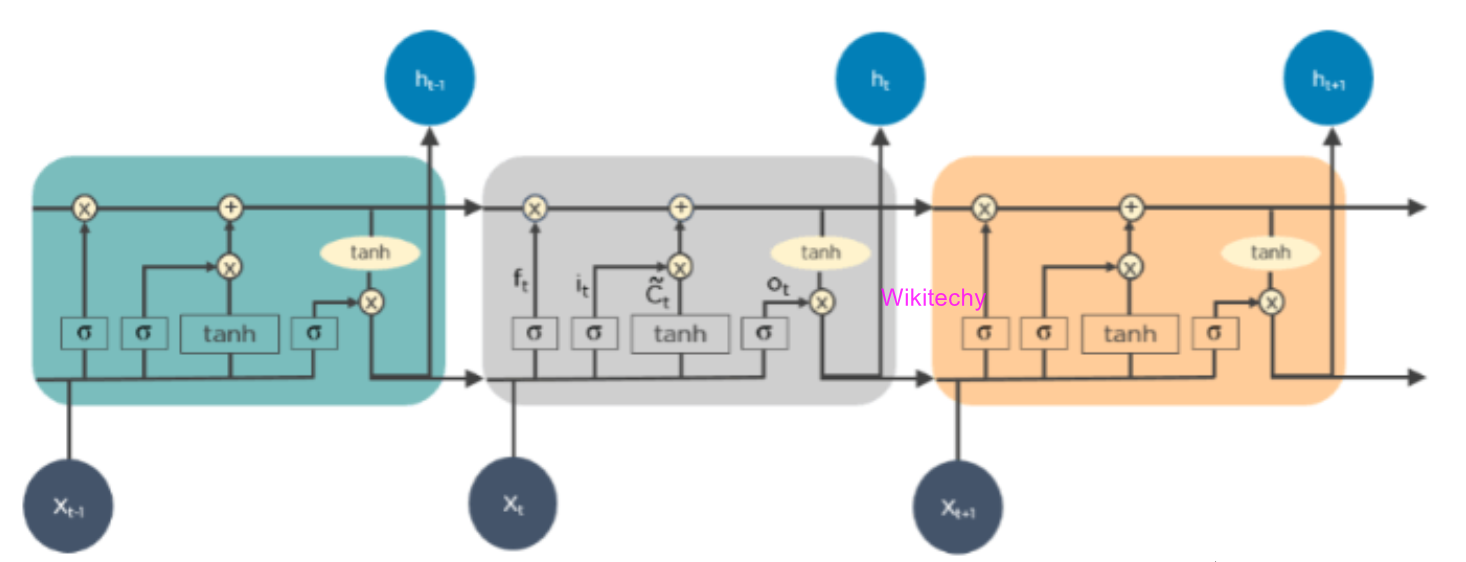
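
The convolution, ReLU, pooling and flattening steps can be traced on a small example. This is a sketch under assumptions: the 5x5 input, the 2x2 kernel, the stride of 1 without padding, and the 2x2 max pooling are illustrative choices.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image (stride 1, no padding) and sum the products."""
    k_h, k_w = len(kernel), len(kernel[0])
    out_h = len(image) - k_h + 1
    out_w = len(image[0]) - k_w + 1
    return [[sum(image[r + i][c + j] * kernel[i][j]
                 for i in range(k_h) for j in range(k_w))
             for c in range(out_w)]
            for r in range(out_h)]

def relu(feature_map):
    """Rectify the feature map: negative responses become zero."""
    return [[max(0, v) for v in row] for row in feature_map]

def max_pool(feature_map, size=2):
    """Down-sample by keeping the largest value in each size x size window."""
    return [[max(feature_map[r + i][c + j] for i in range(size) for j in range(size))
             for c in range(0, len(feature_map[0]) - size + 1, size)]
            for r in range(0, len(feature_map) - size + 1, size)]

def flatten(feature_map):
    """Turn the 2-D array into one long vector for the following layers."""
    return [v for row in feature_map for v in row]

image = [[1, 2, 0, 1, 3],
         [0, 1, 3, 1, 0],
         [2, 1, 0, 0, 1],
         [1, 0, 1, 2, 2],
         [0, 3, 1, 0, 1]]
kernel = [[1, 0],
          [0, -1]]

feature_map = conv2d(image, kernel)   # 4x4
rectified = relu(feature_map)         # 4x4, no negative values
pooled = max_pool(rectified)          # 2x2
vector = flatten(pooled)              # [1, 3, 2, 1]
```

In a trained CNN the kernel values are learned rather than fixed by hand, and many kernels run in parallel to produce many feature maps.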

Long Short-Term Memory Networks (LSTMs)

- Long Short-Term Memory networks are a kind of Recurrent Neural Network (RNN) designed to learn long-term dependencies.

- They can memorize and recall past data over long periods; this is their default behavior.

- They are designed to retain information over time and are therefore widely used in time series prediction, because they can remember previous inputs.

- They have a chain-like structure in which each repeating module contains four interacting layers that communicate with each other in a particular way.

- Besides time series prediction, they are used in pharmaceutical development, speech recognition and music composition.
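
The four interacting layers are commonly written as a forget gate, an input gate, a candidate layer and an output gate. The sketch below follows that common formulation; the parameter names, the single hidden unit and the hand-picked biases are assumptions made to show the memory effect, not trained values.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def affine(W, b, v):
    return [sum(w * x for w, x in zip(row, v)) + bias for row, bias in zip(W, b)]

def lstm_step(x_t, h_prev, c_prev, p):
    """One LSTM step built from four layers: forget, input, candidate, output."""
    z = x_t + h_prev  # concatenate the current input with the previous hidden state
    forget = [sigmoid(a) for a in affine(p["W_f"], p["b_f"], z)]        # what to keep
    write = [sigmoid(a) for a in affine(p["W_i"], p["b_i"], z)]         # what to add
    candidate = [math.tanh(a) for a in affine(p["W_g"], p["b_g"], z)]   # new content
    output = [sigmoid(a) for a in affine(p["W_o"], p["b_o"], z)]        # what to expose
    c = [f * c_old + i * g
         for f, c_old, i, g in zip(forget, c_prev, write, candidate)]
    h = [o * math.tanh(c_k) for o, c_k in zip(output, c)]
    return h, c

# One input feature, one hidden unit. Zero weights and extreme gate biases are
# a contrived setting: the forget gate stays open and the input gate stays shut.
zero = [[0.0, 0.0]]
params = {"W_f": zero, "b_f": [20.0], "W_i": zero, "b_i": [-20.0],
          "W_g": zero, "b_g": [0.0], "W_o": zero, "b_o": [0.0]}

h, c = [0.0], [0.7]
for x in [1.0, -2.0, 0.5] * 10:   # 30 time steps of unrelated input
    h, c = lstm_step([x], h, c, params)
```

After 30 steps the cell state `c` is still very close to its starting value of 0.7, which illustrates how the gates let an LSTM carry information over long spans.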

Recurrent Neural Networks (RNNs)

- Recurrent Neural Networks have directed connections that form a cycle, which allows the output of the previous step to be fed as input to the current step.

- These past inputs are retained in the network's internal memory (its hidden state) for a period of time.

- The output at each step therefore depends on both the current input and the state preserved from earlier steps; LSTMs are a kind of RNN with a stronger memory mechanism.

- They are mostly used for image captioning, time series analysis, handwriting recognition and machine translation.

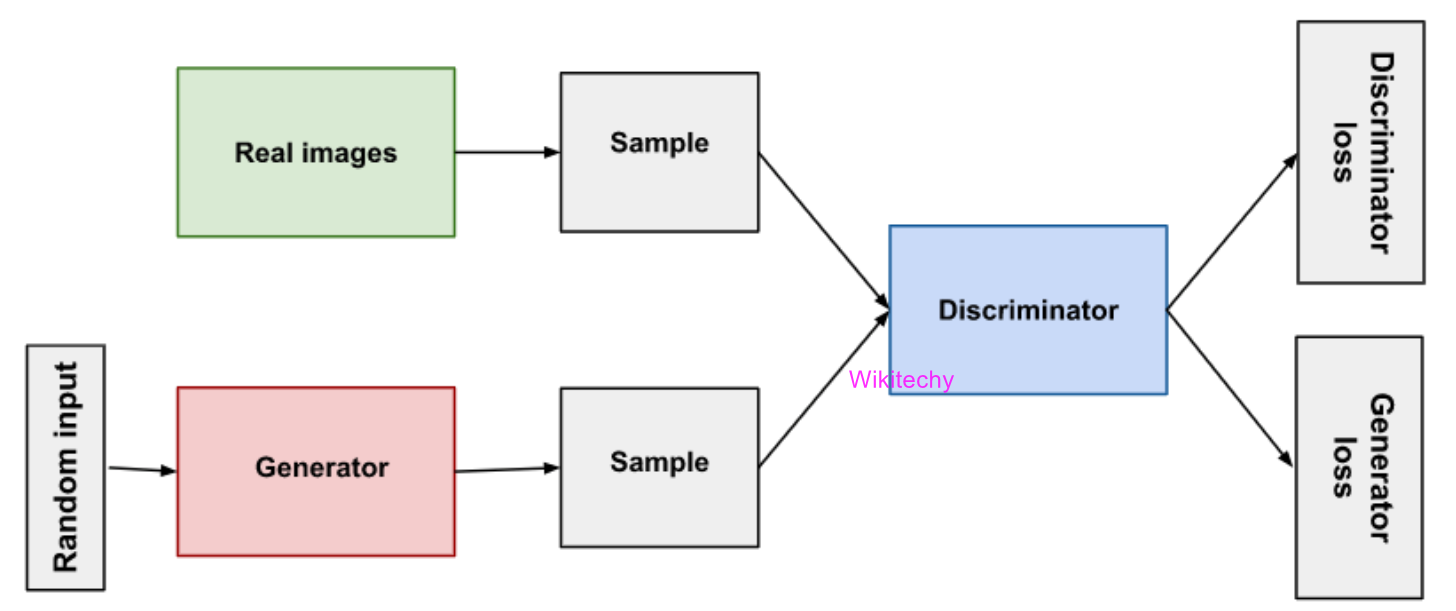

Generative Adversarial Networks (GANs)

- Generative Adversarial Networks are deep learning algorithms used to generate new instances of data that resemble the training data.

- A GAN usually consists of two components: a generator, which learns to generate fake data, and a discriminator, which learns to tell this fake data from real data.

- Over time, GANs have come into wide use; for example, they are used to sharpen astronomical images and to simulate gravitational lensing for dark matter research.

- In video games, they are used to upscale 2D textures by recreating them at higher resolutions such as 4K.

- They are also used to create realistic cartoon characters, render human faces and render 3D objects.

- GANs work by having the generator produce fake data while the discriminator learns to distinguish it from real data, each improving against the other.

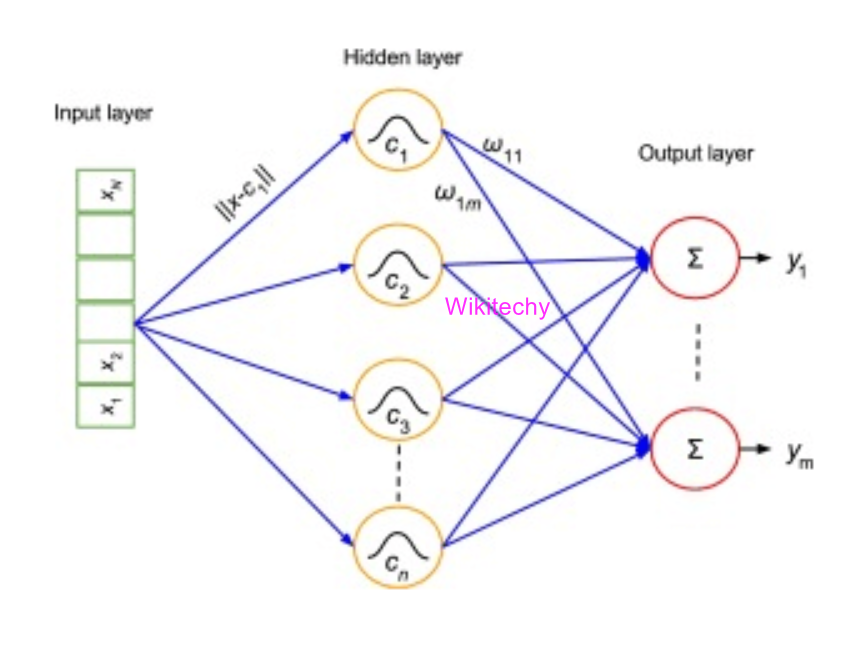
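
The opposing goals of the two components can be made concrete with their loss functions. The sketch below assumes the commonly used binary cross-entropy losses (with the non-saturating generator loss) and takes the discriminator's outputs as given probabilities; no actual networks are trained, and the function names are hypothetical.

```python
import math

def discriminator_loss(d_real, d_fake):
    """The discriminator wants d_real close to 1 and d_fake close to 0.

    d_real: its predicted probabilities of "real" for real samples.
    d_fake: its predicted probabilities of "real" for generated samples.
    """
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """The generator wants the discriminator to rate its fake samples as real."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)

# A discriminator that separates real from fake well has a low loss ...
confident = discriminator_loss(d_real=[0.9, 0.8], d_fake=[0.1, 0.2])
# ... while one that cannot tell them apart outputs 0.5 everywhere.
confused = discriminator_loss(d_real=[0.5, 0.5], d_fake=[0.5, 0.5])

# The generator's loss falls as its samples fool the discriminator more often.
fooling = generator_loss([0.9])
failing = generator_loss([0.1])
```

Training alternates between lowering the discriminator's loss and lowering the generator's loss, so an improvement on one side raises the pressure on the other.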

Radial Basis Function Networks (RBFNs)

- Radial Basis Function Networks are a specific type of feed-forward neural network that uses radial basis functions as activation functions.

- They consist of three layers: an input layer, a hidden layer and an output layer.

- They are mostly used for time series prediction, regression and classification.

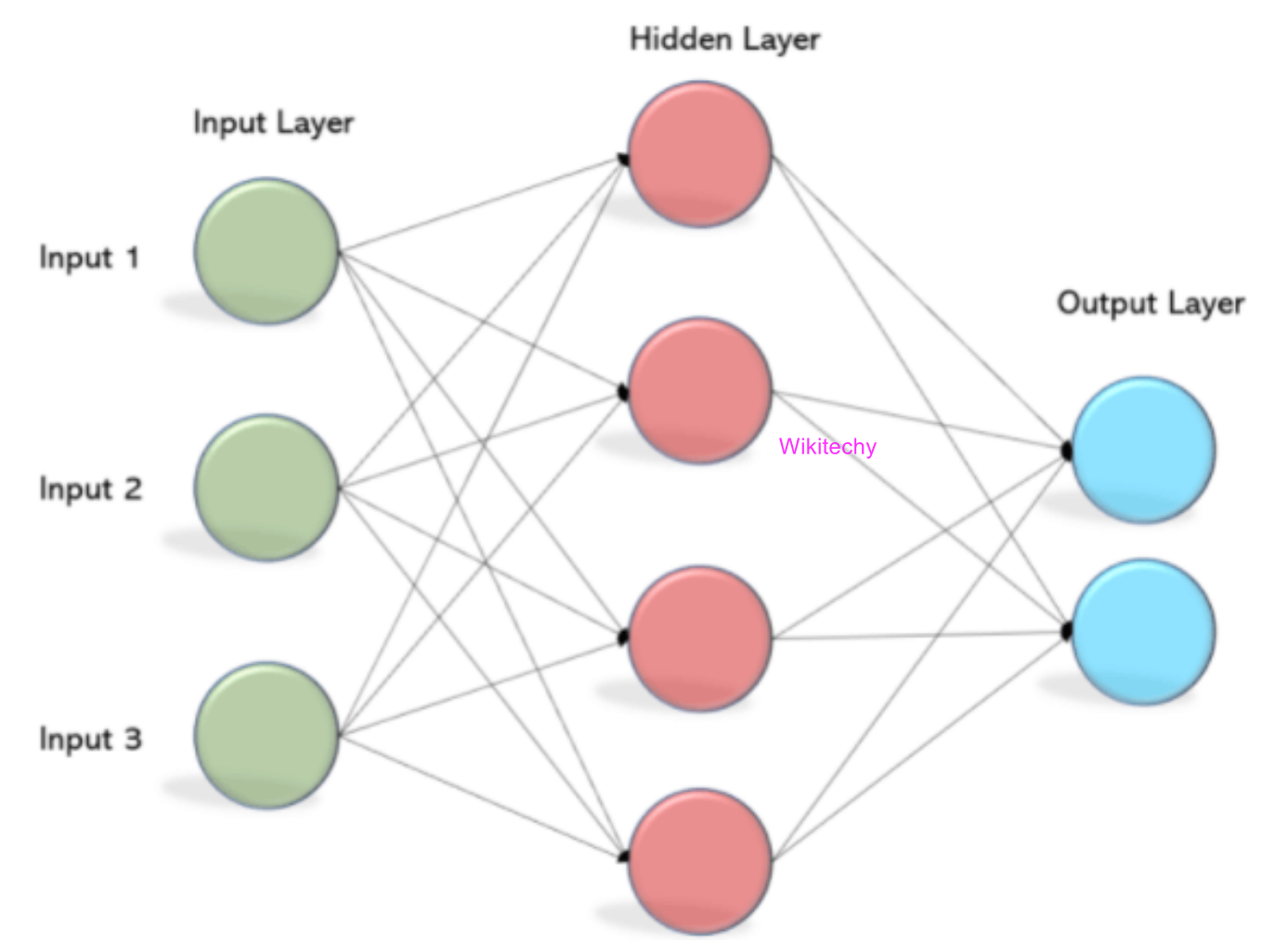
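
A minimal sketch of the three layers, assuming Gaussian radial basis functions in the hidden layer and a linear output layer. The centers, the width and the output weights are hand-picked for illustration; in practice they would be fitted to data.

```python
import math

def gaussian_rbf(x, center, width):
    """Activation depends only on the distance between the input and the center."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, center))
    return math.exp(-sq_dist / (2.0 * width ** 2))

def rbfn_forward(x, centers, width, out_weights, out_bias):
    """Input layer -> RBF hidden layer -> linear output layer."""
    hidden = [gaussian_rbf(x, c, width) for c in centers]
    output = sum(w * h for w, h in zip(out_weights, hidden)) + out_bias
    return output, hidden

centers = [[0.0, 0.0], [1.0, 1.0]]   # one hidden unit per center (assumed values)
out_weights = [1.0, -1.0]

at_first_center, hidden_at_center = rbfn_forward([0.0, 0.0], centers, 0.5, out_weights, 0.0)
far_away, hidden_far = rbfn_forward([5.0, 5.0], centers, 0.5, out_weights, 0.0)
```

A hidden unit responds most strongly when the input sits on its center and fades toward zero as the input moves away, which is what makes the activation "radial".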

Multilayer Perceptrons (MLPs)

- Multilayer Perceptrons are the foundation of deep learning technology; they belong to the class of feed-forward neural networks and have several layers of perceptrons.

- They have one input layer and one output layer, connected through one or more hidden layers that lie between the two.

- They are mostly used to build image and speech recognition systems and some kinds of translation software.

- An MLP works by feeding the data into the input layer; the neurons in the layers form a graph whose connections pass signals in one direction only.

- It uses activation functions to determine which nodes fire.

- Training an MLP teaches the model the correlations between inputs and outputs that are needed to produce the desired output from the given data set.

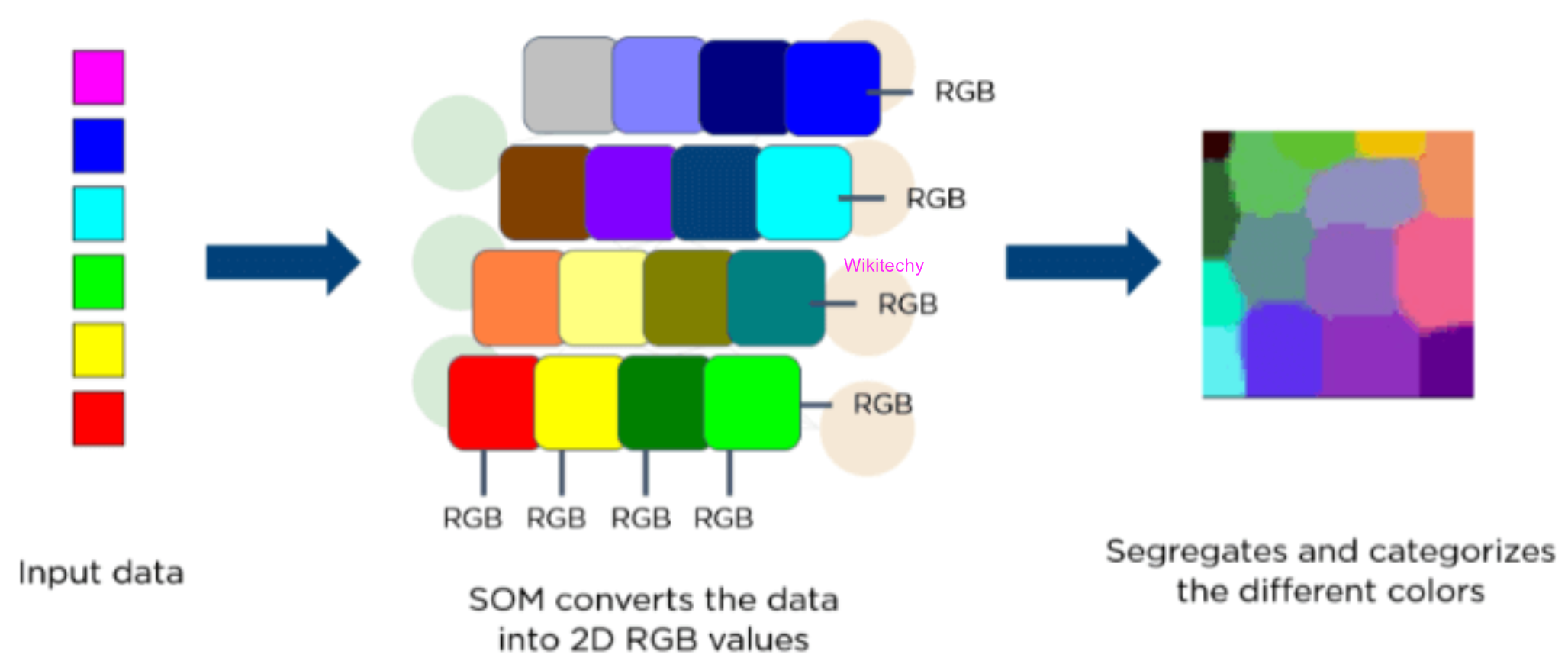

Self-Organizing Maps (SOMs)

- Self-Organizing Maps were invented by Teuvo Kohonen to visualize data and understand its dimensions through self-organizing artificial neural networks.

- Data visualization of this kind targets problems where the data cannot easily be visualized by humans.

- Such data is generally high-dimensional, so automating the visualization reduces human involvement and, with it, the chance of human error.

- A SOM helps visualize the data by initializing the weights of the different nodes and then choosing random vectors from the given training data.

- It examines each node to find the one whose weights are closest to the chosen vector (the best matching unit) so that dependencies can be understood.
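
One training step of this procedure can be sketched as follows, assuming a one-dimensional map, Euclidean distance and a fixed learning rate and neighborhood radius. These choices and the function names are illustrative assumptions.

```python
import random

def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def best_matching_unit(nodes, sample):
    """Examine every node and return the index of the one closest to the sample."""
    return min(range(len(nodes)), key=lambda k: squared_distance(nodes[k], sample))

def som_step(nodes, sample, lr=0.5, radius=1):
    """Pull the best matching unit and its map neighbors toward the sample."""
    bmu = best_matching_unit(nodes, sample)
    for k in range(len(nodes)):
        if abs(k - bmu) <= radius:  # neighbors along the 1-D map
            nodes[k] = [w + lr * (s - w) for w, s in zip(nodes[k], sample)]
    return bmu

rng = random.Random(0)
nodes = [[rng.random(), rng.random()] for _ in range(5)]   # initialize node weights
training_data = [[0.1, 0.9], [0.8, 0.2], [0.5, 0.5]]
sample = rng.choice(training_data)                          # choose a random vector

distance_before = min(squared_distance(n, sample) for n in nodes)
bmu = som_step(nodes, sample)
distance_after = squared_distance(nodes[bmu], sample)
```

Repeating the step over many random samples, usually while shrinking `lr` and `radius`, arranges the nodes so that neighboring nodes represent similar inputs.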

Deep Belief Networks (DBNs)

- Deep Belief Networks are generative models that have multiple layers of stochastic latent variables.

- They are built as a stack of Restricted Boltzmann Machine (RBM) layers, each of which communicates with the previous and the following layer.

- They are used in applications such as video and image recognition and motion capture.

- They learn the values of the latent variables in every layer with a bottom-up pass.

Restricted Boltzmann Machines

- Restricted Boltzmann Machines, popularized by Geoffrey Hinton, are stochastic neural networks that learn a probability distribution over their set of inputs.

- They are mainly used for dimensionality reduction, regression, classification and topic modeling, and are considered the building blocks of DBNs.

- An RBM accepts the inputs and, in the forward pass, translates them into a set of numbers that encode them.
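
The forward pass, together with one step of the Contrastive Divergence algorithm mentioned earlier for training, can be sketched as follows. This is a simplified CD-1 variant under assumptions: binary units, probabilities used for the reconstruction, and hypothetical names, sizes and learning rate.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def hidden_probs(v, W, hid_bias):
    """Forward pass: encode a visible vector as hidden activation probabilities."""
    return [sigmoid(hid_bias[j] + sum(v[i] * W[i][j] for i in range(len(v))))
            for j in range(len(hid_bias))]

def visible_probs(h, W, vis_bias):
    """Backward pass: reconstruct the visible units through the same weights."""
    return [sigmoid(vis_bias[i] + sum(h[j] * W[i][j] for j in range(len(h))))
            for i in range(len(vis_bias))]

def cd1_update(v0, W, vis_bias, hid_bias, lr, rng):
    """One Contrastive Divergence step: compare the data with its reconstruction."""
    p_h0 = hidden_probs(v0, W, hid_bias)
    h0 = [1.0 if rng.random() < p else 0.0 for p in p_h0]  # sample hidden states
    v1 = visible_probs(h0, W, vis_bias)                     # reconstruction
    p_h1 = hidden_probs(v1, W, hid_bias)
    for i in range(len(v0)):
        for j in range(len(p_h0)):
            W[i][j] += lr * (v0[i] * p_h0[j] - v1[i] * p_h1[j])
        vis_bias[i] += lr * (v0[i] - v1[i])
    for j in range(len(p_h0)):
        hid_bias[j] += lr * (p_h0[j] - p_h1[j])
    return v1

rng = random.Random(0)
n_visible, n_hidden = 6, 3   # illustrative sizes
# W[i][j] links visible unit i to hidden unit j; there are no links within a layer.
W = [[rng.uniform(-0.1, 0.1) for _ in range(n_hidden)] for _ in range(n_visible)]
vis_bias = [0.0] * n_visible
hid_bias = [0.0] * n_hidden

v0 = [1.0, 1.0, 0.0, 0.0, 1.0, 0.0]
code = hidden_probs(v0, W, hid_bias)
reconstruction = cd1_update(v0, W, vis_bias, hid_bias, lr=0.1, rng=rng)
```

The single weight matrix `W` is used in both directions, which reflects the symmetric connections between the visible and hidden layers.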
