[ Solved -11 Answers] JAVA – Why is it faster to process a sorted array than an unsorted array

A piece of C++ code that seems very peculiar. For some reason, sorting the data miraculously makes the code almost six times faster.

- Without std::sort(data, data + arraySize);, the code runs in 11.54 seconds.

- With the sorted data, the code runs in 1.93 seconds.

Initially, this might be just a language or compiler anomaly. So a Trail in Java.

With a somewhat similar but less extreme result.

- Notice that the data is evenly distributed between 0 and 255.

- When the data is sorted, roughly the first half of the iterations will not enter the if-statement.

- After that, they will all enter the if-statement.

- This is very friendly to the branch predictor since the branch consecutively goes the same direction many times.

- Even a simple saturating counter will correctly predict the branch except for the few iterations after it switches direction.

Quick visualization:

T = branch taken N = branch not taken data[] = 0, 1, 2, 3, 4, … 126, 127, 128, 129, 130, … 250, 251, 252, … branch = N N N N N … N N T T T … T T T … = NNNNNNNNNNNN … NNNNNNNTTTTTTTTT … TTTTTTTTTT (easy to predict)

- However, when the data is completely random, the branch predictor is rendered useless because it can’t predict random data.

data[] = 226, 185, 125, 158, 198, 144, 217, 79, 202, 118, 14, 150, 177, 182, 133, … branch = T, T, N, T, T, T, T, N, T, N, N, T, T, T, N … = TTNTTTTNTNNTTTN … (completely random – hard to predict)

If the compiler isn’t able to optimize the branch into a conditional move,

Replace:

with:

- This eliminates the branch and replaces it with some bitwise operations.

C++ – Visual Studio 2010 – x64 Release

Java – Netbeans 7.1.1 JDK 7 – x64

- With the Branch:There is a huge difference between the sorted and unsorted data.

- With the Hack:There is no difference between sorted and unsorted data.

- In the C++ case, the hack is actually a tad slower than with the branch when the data is sorted.

- The meaning of this particular if… else… branch is to add something when a condition is satisfied.

- This type of branch can be easily transformed into a conditional movestatement, which would be compiled into a conditional move instruction: cmovl, in an x86 system.

- The branch and thus the potential branch prediction penalty is removed.

- In C, thus C++, the statement, which would compile directly (without any optimization) into the conditional move instruction in x86, is the ternary operator … ? … : ….

- So it can be rewritten as:

Thus from the previous fix, lets investigate the x86 assembly they generate. For simplicity, we use two functions max1 and max2.

- max2 uses much less code due to the usage of instruction cmovge

- But the real gain is that max2does not involve branch jumps, jmp, which would have a significant performance penalty if the predicted result is not right

- In a typical x86 processor, the execution of an instruction is divided into several stages.

- Thus they have different hardware to deal with different stages.

- So we do not have to wait for one instruction to finish to start a new one.

- This is called pipelining.

Starting with the original loop:

With loop interchange, we can change this loop to:

if” conditional is constant throughout the execution of the “i” loop, so you can hoist the “if” out:

the inner loop can be collapsed into one single expression, assuming the floating point model allows it

- The Valgrind tool cachegrind has a branch-predictor simulator, enabled by using the –branch-sim=yes flag.

- Running it over the examples in this question, with the number of outer loops reduced to 10000 and compiled with g++, gives these results:

Sorted:

Unsorted:

- Drilling down into the line-by-line output produced by cg_annotate we see for the loop in question:

Sorted:

Unsorted:

[ad type=”banner”]- the problematic line – in the unsorted version the if (data[c] >= 128) line is causing 164,050,007 mispredicted conditional branches (Bcm) under cachegrind’s branch-predictor model, whereas it’s only causing 10,006 in the sorted version.

- On Linux you can use the performance counters subsystem to accomplish The Valgrind tool cachegrind has a branch-predictor simulator, enabled by using the –branch-sim=yes flag, but with native performance using CPU counters.

Sorted:

Unsorted:

- It can also do source code annotation with dissassembly.

- perf record -e branch-misses ./sumtest_unsorted

- perf annotate -d sumtest_unsorted

Percent | Source code & Disassembly of sumtest_unsorted

A common way to eliminate branch prediction is that, to work in managed languages -a table lookup instead of using a branch

- measure the performance of this loop with different conditions:

Here are the timings of the loop with different True-False patterns:

Condition Pattern Time (ms)

(i & 0×80000000) == 0 T repeated 322

(i & 0xffffffff) == 0 F repeated 276

(i & 1) == 0 TF alternating 760

(i & 3) == 0 TFFFTFFF… 513

(i & 2) == 0 TTFFTTFF… 1675

(i & 4) == 0 TTTTFFFFTTTTFFFF1275

(i & 8) == 0 8T 8F 8T 8F … 752

(i & 16) == 0 16T 16F 16T 16F … 490

- A “bad” true-false pattern can make an if-statement up to six times slower than a “good” pattern!

- Thus which pattern is good and which is bad depends on the exact instructions generated by the compiler and on the specific processor.

- The way to avoid branch prediction errors is to build a lookup table, and index it using the data

- By using the 0/1 value of the decision bit as an index into an array, we can make code that will be equally fast whether the data is sorted or not sorted.

- Our code will always add a value, but when the decision bit is 0, we will add the value somewhere we don’t care

This code wastes half of the adds, but never has a branch prediction failure.

- The technique of indexing into an array, instead of using an if statement, can be used for deciding which pointer to use.

- A library that implemented binary trees, and instead of having two named pointers (pLeft and pRight or whatever) had a length-2 array of pointers, and used the “decision bit” technique to decide which one to follow.

- For example, instead of:

- In the sorted case, you can do better than relying on successful branch prediction or any branchless comparison trick: completely remove the branch.

- The array is partitioned in a contiguous zone with data < 128 and another with data >= 128. So you should find the partition point with a dichotomic search (using Lg(arraySize) = 15 comparisons), then do a straight accumulation from that point.

Something like (unchecked)

slightly more obfuscated

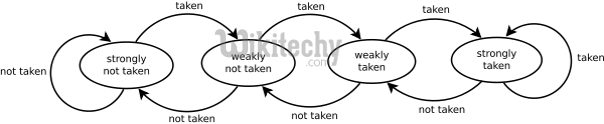

The diagram shows why the branch predictor gets confused.

- Each element in the original code is a random value

- so the predictor will change sides as the std::rand() blow.

- Once it’s sorted, the predictor will first move into a state of strongly not taken and when the values change to the high value the predictor will in three runs through change all the way from strongly not taken to strongly taken.

nice try

nice